Instant environment perception from a mobile platform with a new-generation geospatial database background

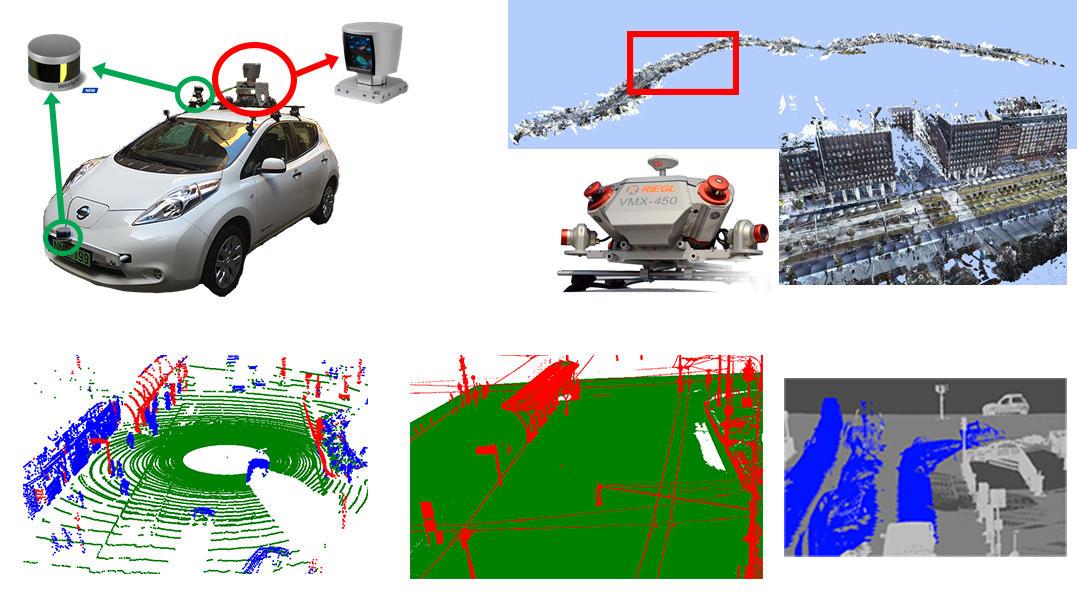

Modern 3D sensors have revolutionized the acquisition of environmental information. Apart from safe navigation, the 3D vision systems of self-driving vehicles can be used for real-time mapping of the environment and for detecting and analyzing static (traffic signs, power lines, vegetation, street furniture) and dynamic (traffic flow, crowd gathering, unusual events) scene elements. The onboard 3D sensors (Lidar laser scanners, calibrated camera systems and navigation sensors) record measurement sequences at high frame rates. However, environment analysis based purely on onboard sensor measurements exhibits significant limitations, owing to the sensors' limited spatial resolution, various occlusion effects in the 3D scenes, and the short observation time caused by the vehicle's driving speed.

New-generation geo-information systems (GIS) store extremely detailed 3D maps of cities, consisting of dense 3D point clouds, registered camera images and semantic metadata. These systems also face great challenges, due to the high expense of environment scanning missions, the cost of evaluating the tremendous quantity of data, and the need to implement quick querying and efficient updating of the semantic databases.

The main goal of the project is to facilitate the joint exploitation of measurements from the cars' instant sensing platforms and the offline spatial database content of the newest GIS solutions. We propose a new algorithmic toolkit that allows self-driving cars to obtain relevant GIS information in real time for decision support, and that provides opportunities for extending and updating the GIS databases based on the sensor measurements of vehicles in everyday traffic.